Building The Best AI Investor

A test of open- vs closed-source AI models

The Deload explores my curiosities and experiments across AI, finance, and philosophy.

Disclaimer. The Deload is a collection of my personal thoughts and ideas. My views here do not constitute investment advice. Content on the site is for educational purposes. The site does not represent the views of Deepwater Asset Management. I may reference companies in which Deepwater has an investment. See Deepwater’s full disclosures here.

Additionally, any Intelligent Alpha strategies referred to in writings on The Deload represent strategies tracked as indexes or private test portfolios that are not investable in either case. References to these strategies are for educational purposes as I explore how AI acts as an investor.

The Best

James Dyson built 5,127 prototypes of his dual cyclone vacuum before arriving at his first finished product.

Dyson called this the Edisonian approach in his first autobiography: “test, and test, and test until it works best.”

I’m on version 358 or so of using AI to challenge markets with Intelligent Alpha.

Version 358 doesn’t mean the product isn’t ready. Intelligent Alpha is quite ready based on performance so far. More than 80% of the AI-powered portfolios continue to outperform benchmarks since inception, but the product will never be finished. Great products never are.

“The Best” of anything is just the best so far. Free markets demand continued improvement to stay The Best. Persistent and rapid innovation is the only real moat of every great technology product.

Elon Musk has said as much:

"I think moats are lame. They're like nice in a sort of quaint, vestigial way, but if your only defense against invading armies is a moat, you will not last long. What matters is the pace of innovation. That is the fundamental determinant of competitiveness."

I want Intelligent Alpha to be The Best investor, period. Competing against indexes, human competition, other AI, it doesn’t matter. The only way to be The Best is to follow Dyson and Elon’s lead: innovate fast, innovate consistently.

The need for persistent innovation brings me to the open-source AI world.

Intelligent Alpha’s AI investment committee consists of three closed-source models — GPT from OpenAI, Gemini from Google, and Claude from Anthropic. Those three models are the most familiar and available to casual AI observers as they power chat interfaces like ChatGPT, Bard, and Claude. That’s why I started with them as a novice AI experimenter.

Now that I’m a more experienced AI experimenter, I realize there’s an entire group of open-source AI models I’ve ignored for stock picking. Hugging Face offers over 460,000 open-source models on its platform. With so many models to choose from, there have to be some that are good at investing, right?

Let’s find out.

Open Source AI Stock Pickers

Hugging Face’s LLM Leaderboard ranks open-source AI models based on various technical benchmarks. Three models dominate the Leaderboard: Meta’s Llama 2, Mistral’s Mixtral, and Upstage’s SOLAR. I tested those three as stock pickers.

Llama is the most downloaded open-source AI model. Meta said Llama was downloaded over 30 million times as of September 2023 with 10 million downloads in the last month. Llama 2, the current version, comes in several flavors optimized around parameter count and function. I used Llama 2 70B for the stock picking experiment, the most powerful flavor.

Mixtral 8x7B is arguably the hottest open-source model right now. Mixtral uses a technique called “mixture of experts” (MoE), which enhances the efficiency of the model. Whereas Llama 2 uses the entirety of its 70 billion parameters as it parses a user input, Mixtral routes input to a subset of its 47 billion parameters that the model believes can handle the request effectively. It’s like using only the most relevant part of your brain for a task while shutting off the parts that aren’t engaged but would still be neurologically costly to run.
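To make the routing idea concrete, here is a minimal sketch of top-k expert routing in Python. It is a toy, not Mixtral's code: the experts are simple scaling functions, the router scores are made up, and the names `route_top_k` and `moe_layer` are hypothetical. Mixtral's published design has eight experts with two active per token, which is why the example uses eight experts and `k=2`.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Return {expert_index: gate_weight} for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    chosen = ranked[:k]
    gates = softmax([router_scores[i] for i in chosen])
    return dict(zip(chosen, gates))


def moe_layer(token, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    routing = route_top_k(router_scores, k)
    return sum(gate * experts[i](token) for i, gate in routing.items())


# Eight toy "experts": expert i simply multiplies its input by (i + 1).
experts = [lambda x, scale=s: scale * x for s in range(1, 9)]

# Made-up router scores for a single input (assumption, not real model output).
router_scores = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]

routing = route_top_k(router_scores)
output = moe_layer(3.0, experts, router_scores)
```

Only two of the eight toy experts run for this input. The other six are never called, which is the efficiency argument in miniature.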

SOLAR 10.7B uses a technique called depth up-scaling (DUS) that involves copying a model, shearing off some layers of original and copy, merging the sheared models, and then doing additional training on the merged model. The idea is that it adds scale to a model without the complexity of MoE. I think the equivalent brain analogy would be duplicating a brain, shaving off a different part of each brain, then merging them together into a bigger whole and reteaching the brain how to understand the world.
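A rough sketch of the layer surgery, with strings standing in for real transformer layers. Which layers get trimmed (the last few from the original, the first few from the copy) and the 32-layer, trim-8 sizes follow Upstage's published description of SOLAR as best I understand it, so treat them as assumptions. `depth_up_scale` is a hypothetical helper, and the additional training on the merged model is not shown.

```python
import copy


def depth_up_scale(layers, trim):
    """Duplicate a layer stack, trim each copy, and concatenate the results."""
    if not 0 <= trim < len(layers):
        raise ValueError("trim must be between 0 and len(layers) - 1")
    original = copy.deepcopy(layers)
    duplicate = copy.deepcopy(layers)
    top = original[:len(original) - trim]   # drop the last `trim` layers
    bottom = duplicate[trim:]               # drop the first `trim` layers
    return top + bottom


# Placeholder layers; a real model would hold weight tensors here.
base = [f"layer_{i}" for i in range(32)]
scaled = depth_up_scale(base, trim=8)
```

With these illustrative sizes, a 32-layer base becomes a 48-layer stack: layers 0 to 23 from the original followed by layers 8 to 31 from the copy.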

The advanced techniques of the latter two models are effective. Mistral says that Mixtral 8x7B meets or exceeds Llama 2 performance, and Upstage says that SOLAR 10.7B outperforms Mixtral 8x7B.

However…

We don’t care about technical AI benchmarks. The only benchmarks that really matter are whether AI models can do useful things for humans, like build outperforming stock portfolios.

To test whether open-source models are useful as stock pickers, I followed the same steps I used to test Grok last month:

1. Each AI is given the same dataset about large-cap US stocks with a market cap >$15 billion.

2. Each AI is given the same initiating prompt that crafts investment philosophy and instructs the AI to choose a selection of up to 100 stocks it believes will perform best over the next year.

3. Each AI is asked to weight the stocks it selects based on the AI’s confidence of outperformance.

4. I use the individual weighted selection sets to compare the open-source AIs vs one another and vs the existing AI committee.
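In the test, each AI assigns its own weights. For illustration only, here is one simple way confidence scores could be turned into portfolio weights: keep the highest-confidence names up to the 100-stock cap, then scale the scores so they sum to 1. The tickers, the scores, and `confidence_weights` are hypothetical, and this is not the prompt or the method the models actually used.

```python
def confidence_weights(confidence_by_ticker, max_holdings=100):
    """Scale positive confidence scores into weights that sum to 1."""
    positive = {t: c for t, c in confidence_by_ticker.items() if c > 0}
    # Keep only the highest-confidence names, up to the holdings cap.
    top = sorted(positive.items(), key=lambda kv: kv[1], reverse=True)
    top = top[:max_holdings]
    total = sum(score for _, score in top)
    if total == 0:
        return {}
    return {ticker: score / total for ticker, score in top}


# Hypothetical tickers and confidence scores on an arbitrary 1-10 scale.
picks = {"AAA": 9, "BBB": 6, "CCC": 3, "DDD": 2}
weights = confidence_weights(picks)
```

Here `AAA` ends up with 45% of the portfolio because it holds 9 of the 20 total confidence points.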

The results were not what I expected.

Open-source > Closed-source

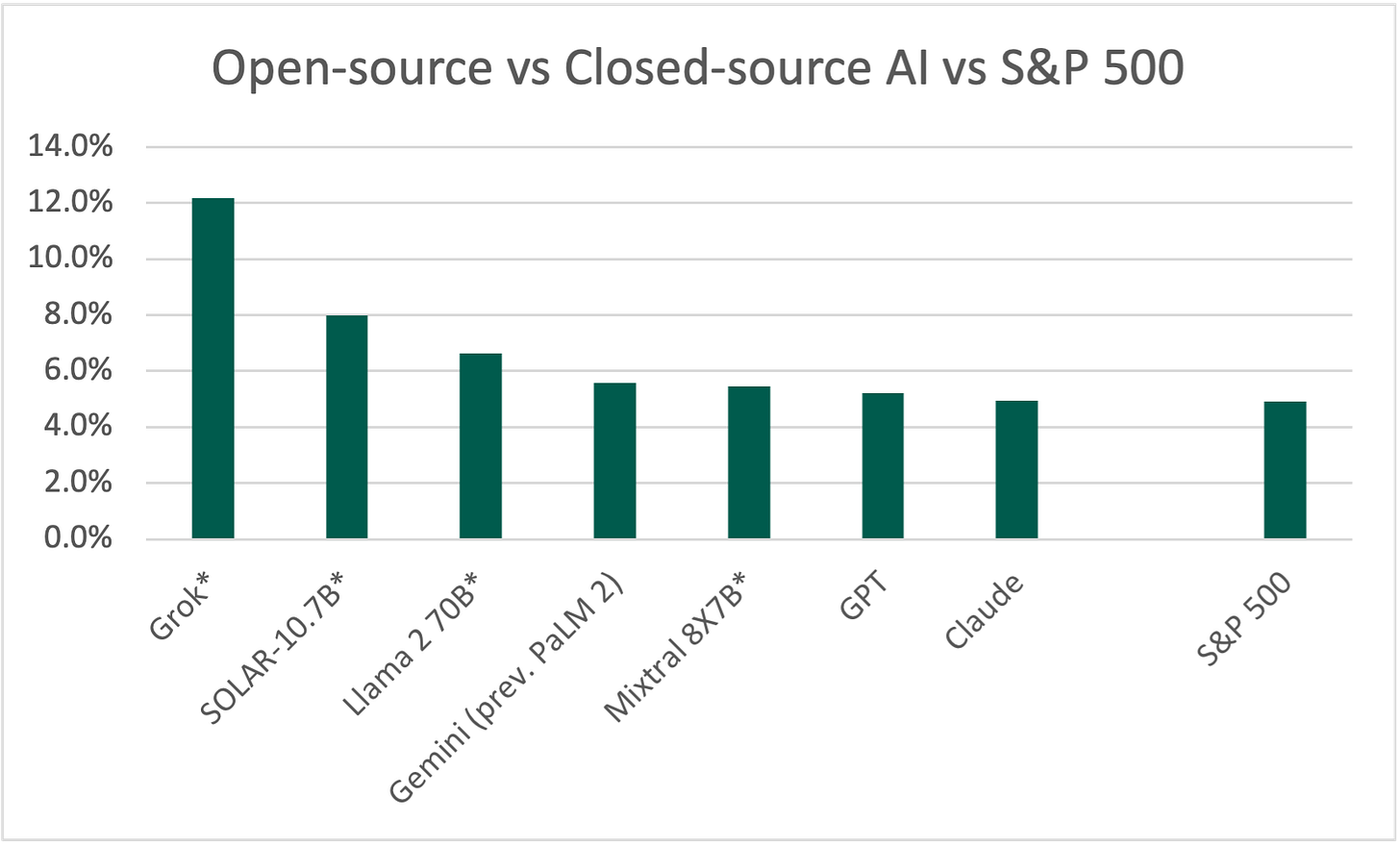

Open-source models proved better at stock picking overall than the closed-source AI.

Both SOLAR and Llama outperformed all the closed-source models. Mixtral beat GPT and Claude, and it fell short of matching Gemini by just 10 bps. Note that the results of the open-source models, as well as Grok’s, are backtested, while the data from Gemini/PaLM, GPT, and Claude is live. Time will crown the ultimate winner as it always does.

Beyond the raw results, the glaring lesson of the experiment is that conviction drives variance in investment outcomes.

The three top AI models have narrower portfolios in total holdings vs others, and they also all have greater concentration amongst their top five picks. Grok and SOLAR show the strongest conviction in their picks with over 50% of each portfolio allocated to its top five holdings. Third-place Llama was a bit more balanced with 18% in top five holdings. Gemini in fourth place was the most aggressive AI in the current Intelligent Alpha committee with almost 35% of its portfolio in the top five holdings.
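The top-five figure is simple to compute from a set of weights. The sketch below uses two made-up portfolios, not the actual AI selections, and `top_n_concentration` is a hypothetical helper name.

```python
def top_n_concentration(weights, n=5):
    """Share of the portfolio held in its n largest positions."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("portfolio weights must sum to a positive number")
    largest = sorted(weights.values(), reverse=True)[:n]
    return sum(largest) / total


# Made-up example: 100 equally weighted names.
even = {f"S{i}": 1.0 for i in range(100)}

# Made-up example: 20 names, with half the portfolio in the top five.
concentrated = {"A": 12, "B": 11, "C": 10, "D": 9, "E": 8}
concentrated.update({f"T{i}": 50 / 15 for i in range(15)})

even_top5 = top_n_concentration(even)
concentrated_top5 = top_n_concentration(concentrated)
```

The equal-weight portfolio has 5% in its top five, while the concentrated one has 50%, roughly the gap between the most diversified and most convicted AIs described above.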

That the most convicted AIs show the greatest outperformance should not come as a surprise. Active managers beat benchmarks by creating variance vs the benchmark. The clearest way to create variance is to concentrate on a few high-conviction ideas, which results in a portfolio drastically narrower than the benchmark.

Inverting the idea of convicted concentration, the more stocks and less concentration in a portfolio, the harder it is for the portfolio to create significant variance from its benchmark. We see this with GPT and Claude, which hold the most stocks overall with relatively even weights. The two models are only modestly ahead of the S&P 500.
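One common way to put a number on how far a portfolio strays from its benchmark is active share: half the sum of the absolute weight differences across every stock in either portfolio. It is a standard industry measure that I am swapping in for illustration, not something the tests above used, and the weights below are made up.

```python
def active_share(portfolio, benchmark):
    """Half the sum of absolute weight differences; 0 = identical, 1 = no overlap."""
    tickers = set(portfolio) | set(benchmark)
    gap = sum(abs(portfolio.get(t, 0.0) - benchmark.get(t, 0.0)) for t in tickers)
    return 0.5 * gap


# Made-up four-stock benchmark with equal weights.
benchmark = {"A": 0.25, "B": 0.25, "C": 0.25, "D": 0.25}

closet_index = dict(benchmark)          # holds exactly the benchmark
concentrated = {"A": 0.5, "B": 0.5}     # holds only half of the names
off_benchmark = {"E": 1.0}              # holds nothing from the benchmark

scores = [active_share(p, benchmark)
          for p in (closet_index, concentrated, off_benchmark)]
```

The more names a portfolio holds at roughly benchmark weights, the closer its score gets to zero, and the less room it has to beat or trail the index.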

Of all the AI contenders, Llama’s portfolio is the one I would choose if I had to put all my money into a single portfolio given the current market environment.

Whereas Grok and SOLAR are super aggressive and love megacap tech, and Mixtral and GPT are sort of milquetoast, the Llama portfolio balances concentration, conviction, and differentiated sector choice. Llama has almost 20% of the portfolio in its top five, it neither ignores nor maximizes megacap tech, and it has a strong bet on healthcare that could pay off in a choppy market.

Open Source Wins, Case Closed?

I expected closed-source models to outperform open given the vastly greater resources behind the closed AI companies. The rather commanding victory of open source raises the question: Is open-source AI naturally better for stock picking for some reason?

There are three possible explanations for why open source beat closed in stock picking:

1. Luck. The shorter the time period we assess in investing, the greater a factor luck plays. Open source could have just been lucky. The style the models presented, aggressive and largely tech positive, was the right style for the environment. If luck is the reason for the results, the luck will fade over time, and maybe closed source will look better next time.

2. Limitation as a feature, not a bug. One theory I have is that the open-source models may be inherently more limited in understanding than the closed-source models. While Llama seemed to grasp the task instantly, SOLAR and Mixtral took some additional work to pick stocks. The limited understanding of the task may be an advantage. GPT, Claude, and Gemini all selected a portfolio of stocks close to the upper limit of the instruction, 100 total. All the open-source models selected fewer stocks for the portfolio. Whether open source built more concentrated portfolios because of conviction or because the models weren’t capable of selecting up toward the limit is another case where time will tell in future tests.

3. Some structural advantage. Maybe there’s something inherent to the open-source models that makes them better stock pickers. It could be greater comfort with concentration or something else. Maybe the structural advantage is the limitation of understanding described above. Whatever the advantage, it may or may not persist. For the last time, only time will tell.

Time is the great equalizer in investing. Accidents and experience are normalized through time. Many humans and machines outperform markets for short periods. Few outperform for years on end. That’s why Buffett is Buffett.

As we accumulate more data about the various AI models as stock pickers over time, the best models should earn their way onto the Intelligent Alpha investment committee. Competition is the most reliable tool to reach optimal financial outcomes, and that’s the point of Intelligent Alpha. Moving forward, Intelligent Alpha’s three-member AI investment committee will be established by merit rather than tradition.

I will continue to test open- and closed-source models, appointing the three that seem to be the best overall stock pickers to the committee as we accrue more data. Much of the choice will be objective and based on generated returns, portfolio construction, and apparent comprehension of the investment task. Some of it will be subjective, as when I lauded Llama as my favorite portfolio to date even though it doesn’t have the highest returns. Claude is the committee member at risk of being bounced, partly because of its relatively poor performance vs the field, but also because Claude has been a pain in the ass to work with lately…

The Opportunity for Open

When I started using AI to pick stocks in July 2023, Claude was the easiest of the AIs to work with.

Not any more. Claude’s borderline useless now.

First, Claude became annoyingly concerned about copyright issues after The NY Times lawsuit vs OpenAI. The last several times I used Claude, 80-90% of my prompts were met with copyright objections about data for which I pay for a license. Then Claude became annoyingly worried about the ethical ramifications of offering stock “advice.”

Anthropic’s differentiator is “Constitutional AI,” which is the company’s approach to instilling certain values into its model. AI alignment is an important concept. We don’t want models that act against the best interests of humanity, but there’s a careful balance between safety and usability.

OpenAI’s greatest competitive advantage may be that it seemingly has a less stuffy ethical compass than Anthropic or even Google. The same advantage will go to many of the companies and experimenters that use open-source AI models, which can be tuned as desired. It is always through pushing boundaries that we achieve progress, not by establishing new ones.

When a product is so “ethical” that it’s angering to use, no one is going to use it. I don’t want to argue with my tools about right or wrong or have to convince them that I’m not violating copyright laws with data to which I possess a license. I want the tool to do what I ask it. We all do.

If I had a choice, I would never use Claude again. The AI is that frustrating. Claude is still a member of the Intelligent Alpha investment committee, but perhaps not for long now that it has less prudish open-source competition.

Intelligent Alpha Performance Update

All but one of my 15 core Intelligent Alpha strategies are ahead of respective benchmarks since inception. The core AI-powered strategies are ahead by an average of 350 bps, and the median strategy is ahead by 340 bps.

For Intelligent Alpha to be “The Best” investor, it needs to sustain the performance we’ve seen so far. To sustain that performance, the process must continually improve, the committee must improve, and even the results must improve from already strong levels.

On to prototype 359, 360, 5,127, and as many as it takes beyond.

I really like your continued approach to always question and seek better results. I know I've been lucky over the years, but it's interesting that these new models chose fewer candidates that actually topped your old, closed committee. I'm just a layman investor, (and never trusted a financial advisor), but I have generally done better with fewer stocks, growth-oriented stocks, (which let's be honest, mostly tech) and timing!! I know we're not supposed to time the market, but there have been a couple of obvious times to get out! For perspective, I just turned 70 and have been investing for over 50 years, all solo. I am grateful to have had a "risky" approach that I was always "advised" not to do. We all know that there are a variety of ways to make money in this stock market, but I feel very familiar and comfortable with your new committee's approach. Keep up the great work.

Good morning Doug and HNY, lots of blessings in 2024! I enjoyed reading this note and the exploration with open-source models which continues to reinforce the idea of the pace of disintermediation in the asset management industry, and those who service them. As we have discussed previously, the likes of MSCI and S&P, to cite a couple. Are we evolving to a marketplace of sorts that investors subscribe to in order to design the investment strategies that allow them to achieve their long-term goals? Will this type of innovation, like the one you are pursuing with Intelligent Alpha, continue to put pricing pressure on the asset management industry that is still operating with a generalist-based model which struggles to deliver personalization? What about the size of investor base globally, will it expand further as a result of this type of innovation? I must admit Doug, I feel quite excited and energized about the prospects for the asset management industry and the innovation that we will continue to experience going forward. As you continue to explore open-source models, I wonder if you have heard of CrunchDAO and Numer.ai which have a crowd-source model for alpha generation, expanding the research capacity of asset managers. Happy Sunday!