The Deload explores my curiosities and experiments across AI, finance, and philosophy. If you haven’t subscribed, join nearly 2,000 readers.

Disclaimer. The Deload is a collection of my personal thoughts and ideas. My views here do not constitute investment advice. Content on the site is for educational purposes. The site does not represent the views of Deepwater Asset Management. I may reference companies in which Deepwater has an investment. See Deepwater’s full disclosures here.

Additionally, any Intelligent Alpha strategies referred to in writings on The Deload represent strategies tracked as indexes or private test portfolios that are not investable in either case. References to these strategies are for educational purposes as I explore how AI acts as an investor.

Jack Kendall is Building Artificial Brains

I learned more about AI in my most recent conversation with Jack Kendall than any other AI conversation I’ve had in the past year.

Jack is the CTO of Rain AI, a portfolio company at Deepwater. Rain is developing advanced AI-focused hardware that combines compute and memory to improve efficiency by orders of magnitude. The company’s vision is to create an artificial brain — hardware as efficient as the human brain.

Jack joined me for the most recent Deload Podcast to talk about the state of AI, what’s coming in 2024, AGI by 2025, and how hardware will contribute to the next major wave of gains in AI advancement. Here are my major takeaways.

Addressing the Skeptics of Deep Learning

Not everyone believes that deep learning will take us to “true intelligence.” Skeptics offer two main reasons:

Deep learning can’t do causal reasoning. Jack argues this has been debunked by DeepMind, which showed that models trained with reinforcement learning could extract causal information through various interventions. Jack offers the simple logic that you couldn’t have a conversation with ChatGPT if it couldn’t do some level of causal reasoning. His example: upload a picture of a girl holding balloons and ask ChatGPT what would happen if you cut the strings. I tried this exact example, and ChatGPT explained that the balloons would float away.

Deep learning can’t do symbolic reasoning. Symbolic reasoning is like understanding that dogs are mammals and mammals have fur, so an unseen dog will have fur. Transformer models can do some symbolic reasoning, but not in general. The pinnacle of symbolic reasoning is math, which GPT can’t currently do.
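To make the dog-and-fur example concrete, here is a toy sketch of classic rule-based symbolic inference (forward chaining), the kind of explicit reasoning the skeptics have in mind. It is only an illustration of the concept, not how transformers or any OpenAI system work; the entity name `rex` and the rule format are hypothetical.

```python
def forward_chain(facts, rules):
    """Repeatedly apply 'every X is a Y' rules to (entity, property) facts until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for entity, prop in list(known):  # iterate over a snapshot while adding to `known`
            for premise, conclusion in rules:
                if prop == premise and (entity, conclusion) not in known:
                    known.add((entity, conclusion))
                    changed = True
    return known


# Hypothetical rules: dogs are mammals; mammals have fur.
rules = [("dog", "mammal"), ("mammal", "has_fur")]
# An "unseen" dog: we are told only that rex is a dog.
facts = {("rex", "dog")}

print(sorted(forward_chain(facts, rules)))
# prints: [('rex', 'dog'), ('rex', 'has_fur'), ('rex', 'mammal')]
```

The system never saw a fact about rex's fur; it derives one by chaining two general rules, which is the generalization skeptics say deep learning lacks.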

Jack believes that the breakthrough of Q* at OpenAI may be that it achieved the ability to do math.

Math will be the AI breakthrough of 2024. It will emerge slowly at the grade-school level and eventually reach the university level. AI’s mathematical ability may not need to exceed that level. With the scale of AI, you can run millions of instances of university-level systems to get breakthroughs. You don’t need superintelligence to get there.

AGI by 2025

Another skeptic’s argument about AI is that it’s just fancy autocomplete. This is overly reductive. AI models have a working “understanding” of the world, but it’s not the same as a human’s.
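For contrast, here is what literal autocomplete looks like: a toy bigram model that counts which word follows each word and always predicts the most frequent follower. This is a hypothetical illustration of the reductive view, not how large language models work; they predict the next token with a neural network conditioned on long context, which is far richer than word-pair counts. The tiny corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def autocomplete(follows, word, length=3):
    """Greedily append the most frequent follower, up to `length` words."""
    out = [word]
    for _ in range(length):
        options = follows.get(out[-1])  # .get avoids creating empty entries
        if not options:
            break
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


# Made-up corpus for illustration only.
corpus = "the balloon floats away . the balloon pops . the string is cut"
model = train_bigrams(corpus)
print(autocomplete(model, "the"))  # prints: the balloon floats away
```

A model like this can only echo word pairs it has seen, which is why "just autocomplete" undersells systems that answer novel questions.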

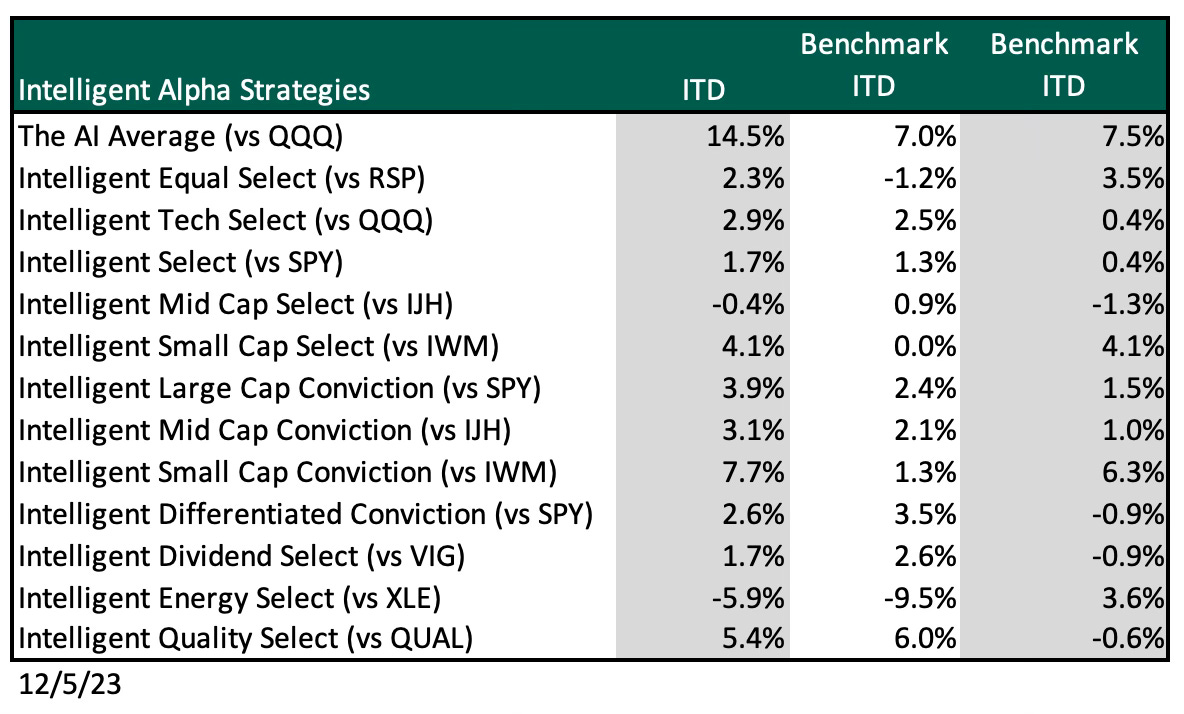

My experiments using AI to challenge markets have convinced me that gen AI understands the world in some way. Nearly 70% of my Intelligent Alpha strategies have beaten their benchmarks since inception, many by 200-500 bps (performance update below).
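For readers less used to basis points, the arithmetic is sketched below: 1 bp is 0.01 percentage point, so 200-500 bps of outperformance means 2-5 percentage points. The return figures in the example are hypothetical placeholders, not Intelligent Alpha results, and the helper names are made up for illustration.

```python
def excess_return_bps(strategy_return, benchmark_return):
    """Outperformance in basis points (1 bp = 0.01 percentage point = 0.0001)."""
    return (strategy_return - benchmark_return) * 10_000


def share_ahead(results):
    """Fraction of (strategy, benchmark) return pairs where the strategy is ahead."""
    return sum(1 for s, b in results if s > b) / len(results)


# Hypothetical returns as decimals (0.12 = 12%); NOT actual strategy data.
results = [(0.12, 0.09), (0.05, 0.07), (0.10, 0.08)]
for s, b in results:
    print(f"{excess_return_bps(s, b):+.0f} bps")  # +300, -200, +200
print(f"{share_ahead(results):.0%} of strategies ahead")  # 67%
```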

The results of Intelligent Alpha have held up for months now, and I intend to run the strategies perpetually. The longer gen AI outperforms markets, a task most humans can’t achieve, the harder it is to argue that the models are just autocompleting stock portfolios.

On the fancy-autocomplete debate, Jack offers an important distinction as we consider machine understanding and intelligence: artificial general intelligence and human-level intelligence are different things. Human-level intelligence is human-level intelligence; it is self-defining. AGI is different.

Jack uses a separate, three-part definition of AGI:

A single network architecture that can, in principle, learn any combination of data modalities, e.g., speech, vision, and language. We have this now.

The ability to use prior experience to learn new things rapidly. That’s causal understanding.

The ability to reason about things and perform tasks it hasn’t seen before. That’s symbolic reasoning.

By this definition, Jack believes we will achieve AGI by 2025.

If models can do math in 2024, then we have all the pieces of intelligence: not necessarily human-level intelligence, but something close. Then the problem of intelligence becomes a matter of scaling models and datasets to reach AGI.

The Hardware Bottleneck in AI Advancement

Jack believes we’ve hit a bit of a plateau in AI around compute. The compute used to train the largest models was doubling every 3.5 months over the past six years, and that pace has to slow. Moore’s Law has also ended: we no longer get exponential increases in GPU price/performance. The size of AI systems won’t keep accelerating like we’ve seen, so we need new ways to make significant gains.
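A quick back-of-the-envelope check shows why a 3.5-month doubling can’t last. The sketch below assumes "six years" means 72 months and, for comparison only, takes Moore’s Law as the classic doubling roughly every two years; the function name is illustrative.

```python
def growth_factor(months, doubling_months):
    """Total growth after `months` if a quantity doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


SIX_YEARS = 6 * 12  # months (assumption: six full years)

ai_scale = growth_factor(SIX_YEARS, 3.5)  # doubling every 3.5 months
moore = growth_factor(SIX_YEARS, 24)      # assumes a ~2-year doubling for comparison

print(f"AI scale: ~{ai_scale:,.0f}x vs Moore's Law pace: {moore:.0f}x")
```

Roughly a 1.6 million-fold increase versus 8x over the same window: the gap had to be filled by spending more money and more chips, which is exactly what can’t keep compounding.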

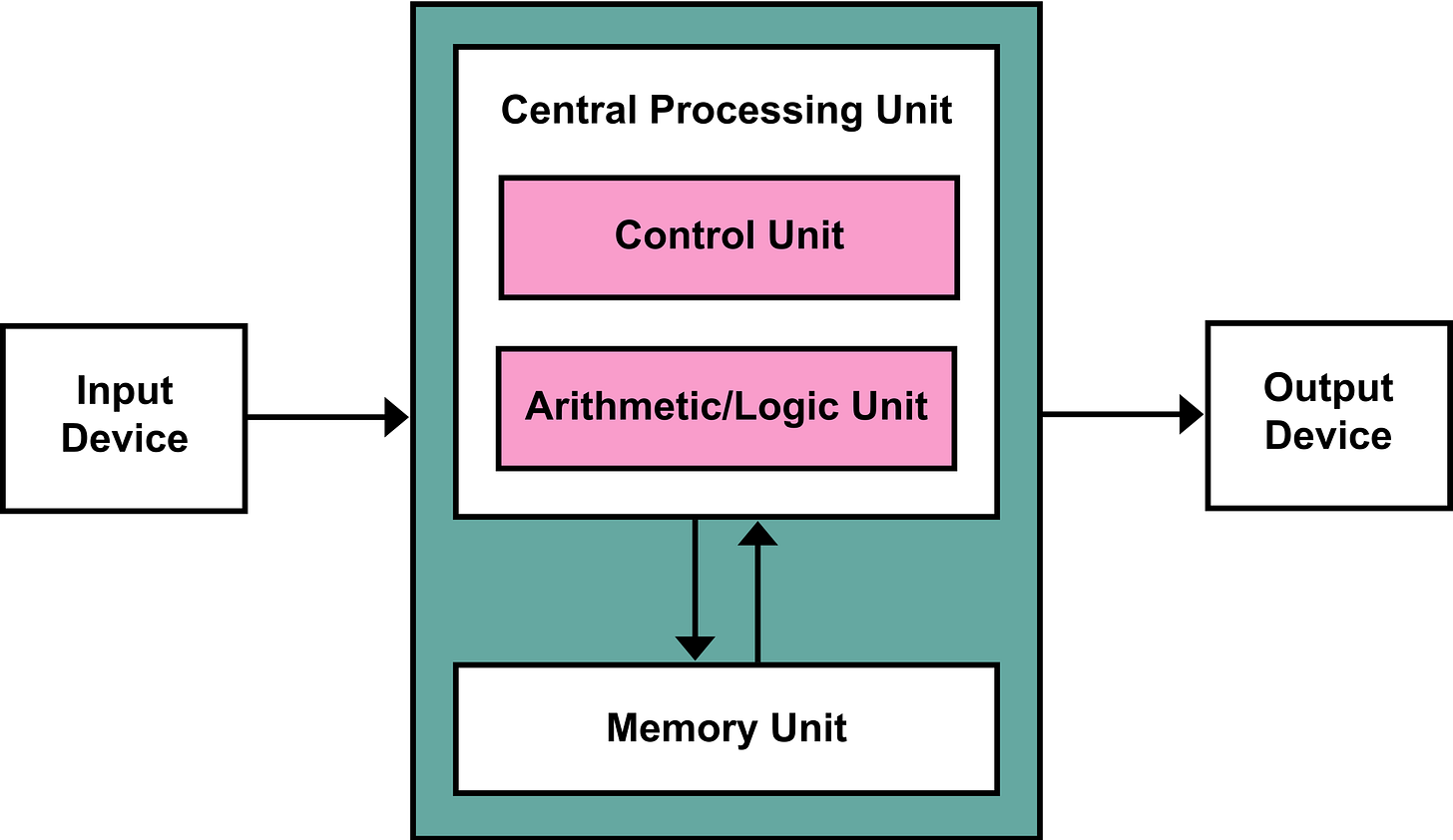

The big limiting factor in AI hardware is that brains and GPUs are nothing alike. CPUs and GPUs are built on the von Neumann architecture, which separates compute and memory. The two are connected by a narrow bus, creating a bottleneck in moving data to the processor. In the human brain, memory and processing are unified.
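One standard way to quantify this bottleneck is the roofline model: a chip’s usable throughput is capped either by its peak compute or by memory bandwidth times the workload’s arithmetic intensity (flops per byte moved). The sketch below applies it to a matrix-vector multiply, a core operation in running neural networks. The peak and bandwidth figures are hypothetical round numbers, not specs of any real GPU, and 2 bytes per value (16-bit numbers) is an assumption.

```python
def matvec_intensity(n, bytes_per_value=2):
    """Arithmetic intensity (flops per byte) of y = W @ x for an n x n matrix W."""
    flops = 2 * n * n                                # one multiply and one add per weight
    bytes_moved = (n * n + 2 * n) * bytes_per_value  # read W and x once, write y once
    return flops / bytes_moved


def attainable_flops(peak_flops, mem_bandwidth, intensity):
    """Roofline model: throughput is limited by compute or by memory traffic."""
    return min(peak_flops, mem_bandwidth * intensity)


PEAK = 100e12      # hypothetical peak compute, flop/s
BANDWIDTH = 2e12   # hypothetical memory bandwidth, bytes/s

intensity = matvec_intensity(4096)
achieved = attainable_flops(PEAK, BANDWIDTH, intensity)
print(f"{intensity:.2f} flop/byte -> {achieved / PEAK:.1%} of peak")
# prints: 1.00 flop/byte -> 2.0% of peak
```

Under these assumptions the processor sits idle about 98% of the time waiting on memory, which is the motivation for moving compute into memory instead of shuttling weights across a bus.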

Rain is building a processor that does compute in memory — an artificial brain. Rain’s first product is targeted at edge devices like drones or wearables, which will give those devices the ability to adapt on the fly. We can’t have intelligent autonomous systems without an ability to learn on the edge. Longer term, Rain aims to build significantly more efficient infrastructure for AI broadly, bringing the necessary improvements in power consumption and latency we need to go beyond AGI.
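To illustrate what "compute in memory" can mean, here is a simulation of one well-known approach, the analog crossbar array. This is a generic sketch and not necessarily how Rain’s chip works. Each weight is stored as a cell’s conductance; applying input voltages to the rows makes each cell pass a current of conductance times voltage (Ohm’s law), and the currents on each column add up (Kirchhoff’s current law). The column currents are a matrix-vector product, computed where the weights are stored. The function name and numbers are hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Simulate an ideal analog crossbar.

    conductances[i][j]: stored weight at row i, column j (siemens).
    voltages[i]: input applied to row i (volts).
    Returns the current collected on each column (amps), i.e. G^T @ V.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * v  # Ohm's law per cell, summed per column
    return currents


# Hypothetical 2x2 array of weights and a pair of input voltages.
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [1.0, 1.0]
print(crossbar_mvm(G, V))  # prints: [4.0, 6.0]
```

The weights never leave the array; only the inputs and the column outputs move, which is what sidesteps the von Neumann bus. Real analog arrays also contend with noise and limited precision, which this ideal model ignores.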

Jack believes that better model architectures can deliver 1-2 orders of magnitude of improvement in AI performance, but hardware has 4-6 orders of magnitude of improvement left before it matches the human brain. That’s the future of AI compute and, by extension, of AI.

Podcast Time Stamps

1:00 AI research to commercialization

6:00 The advantages of being first in AI

7:00 What breakthrough might OpenAI have achieved?

15:00 Jack’s definition of AGI

19:00 What governments should do to protect people from job loss

20:30 The big topic for AI in 2024: mathematics

24:30 AI doesn’t understand like a human, but it understands

28:00 How will AI make new discoveries?

33:00 Rain’s work combining compute and memory in novel processors

38:00 Dynamic learning machines depend on intelligence at the edge

40:30 What’s coming from Rain


Intelligent Alpha Fixes Human Flaws in Investing

Only 10% of actively managed funds have outperformed the S&P 500 over the past 15 years.

Beating the market is hard for anyone, which makes it all the more notable how well generative AI has performed against the market so far in six months of testing.

AI is winning across almost 70% of the strategies I track, oftentimes convincingly. My experience with Intelligent Alpha corroborates Jack’s view from the podcast that AI doesn’t need to understand the world like a human to offer intelligent output.

I believe one of the major reasons that generative AI is winning now against markets and humans, and will continue to win in the future, is that AI doesn’t suffer from the investment flaws of the typical person. Greed and fear are contagious. AI is immune. Emotion dictates investment decisions. AI is immune. Impatience demands action. AI is immune.

I can only imagine how much more effective AI models might be with symbolic reasoning capabilities coming in 2024.

Follow the progress of Intelligent Indices on Thematic.